Digitization of Conduct Monitoring and Controls

September 2025

Common observations of the Insurance Authority

Ask any member of the public what they picture when they think of the Hong Kong insurance industry, and they will probably conjure up an image of an insurance agent in a coffee shop, swiping through insurance options on a tablet.

Yes, the tablet has fast become an essential tool for conducting insurance business, enabling agents to generate recommendations and submit policy applications in real time, with the customer present.

Behind the simplicity of each agent’s screen, however, lies the insurer’s digitized sales system – a complex collection of multi-layered processes, data mappings, parameters, interfaces and database feeds – designed, tested and rolled out under the label of “transformation”. Anyone who has been involved in a transformation project – probably every staff member of an insurer over the past decade – will know the multitude of pain points that come with implementing new digitized sales systems. Digital transformation is far from simple, yet over the past few years many insurers have (painfully) embraced its necessity.

As insurance processes have become increasingly digitized, the conduct inspectors of the Insurance Authority (“IA”) have had to adapt their inspection approach. Conduct inspections nowadays commonly include an evaluation of the effectiveness of embedded system controls, processes and monitoring, and of the degree to which these achieve compliance with core regulatory conduct requirements.

During our inspections we have observed certain common pitfalls in system designs and implementation that can lead to gaps and, potentially, non-compliant sales practices. In this article we share these pitfalls and offer suggestions on how these can be remedied during system reviews, as well as strategies to avoid them in future designs and implementations.

Welcome to the future of insurance!

Let’s take a hypothetical example for the purpose of illustration.

A life insurer rolls out an electronic point-of-sale system called “Sell+” for use by its tied agency force. Sell+ is a platform, accessed on agents’ devices, that allows agents to input information about a customer’s circumstances and generate a list of product options tailored to those circumstances. The agent can select from these to present a recommendation to the customer. If the customer wishes to proceed with the purchase, Sell+ can also be used to submit the policy application.

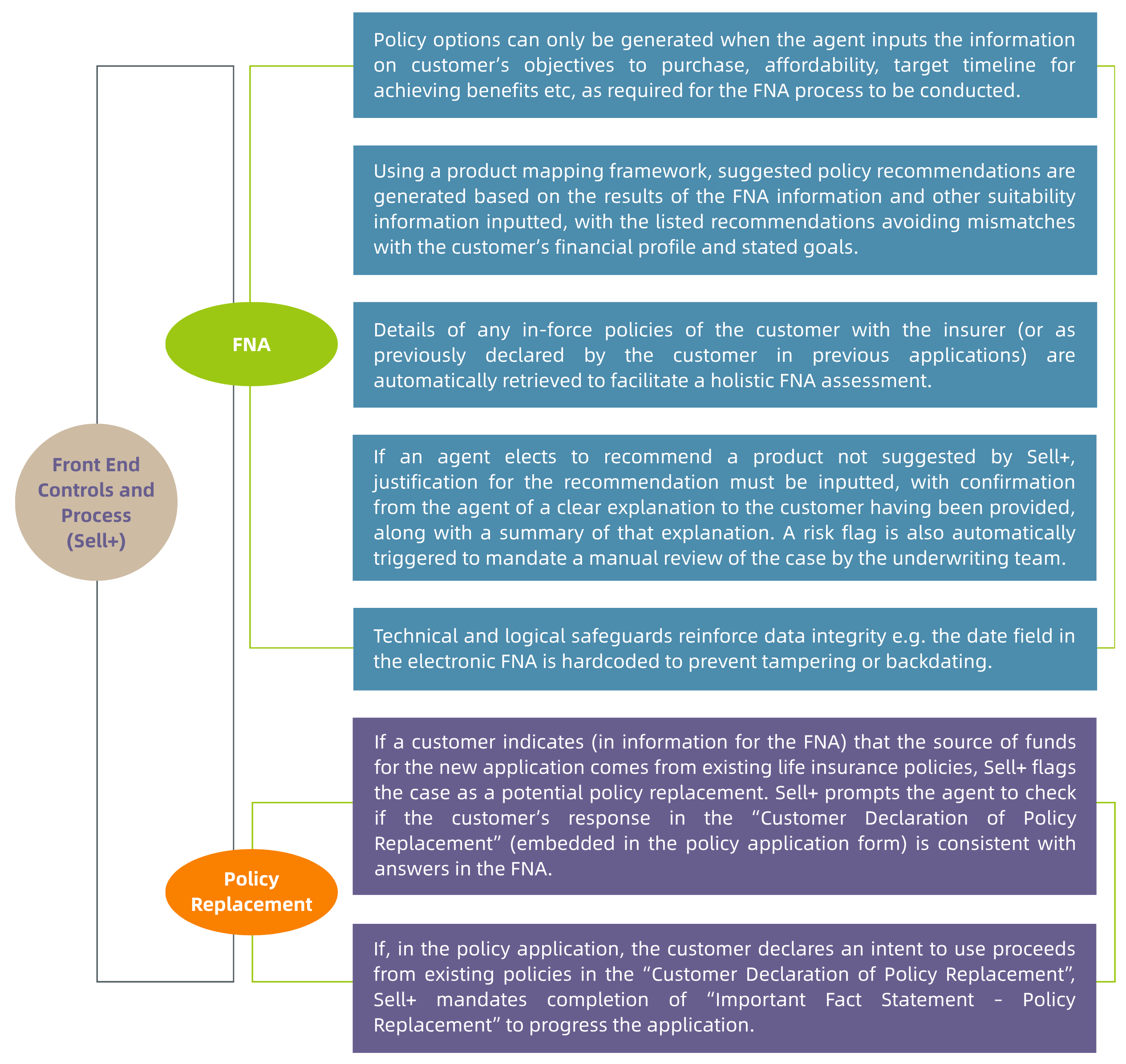

Sell+ incorporates key processes, controls and monitoring to ensure compliance with core regulatory obligations. Before potential recommendations can be generated, the information inputted must, at a minimum, include the customer’s answers needed to carry out the financial needs analysis (“FNA”) (per the IA’s Guideline on Financial Needs Analysis (GL30)), so that a proper suitability assessment is conducted. Processes and prompts are also embedded to ensure that potential policy replacement situations are identified and that customers are given the right advice and information to understand the potential adverse consequences of replacement, enabling them to make fully informed decisions (per the IA’s Guideline on Long Term Insurance Policy Replacement (GL27)).

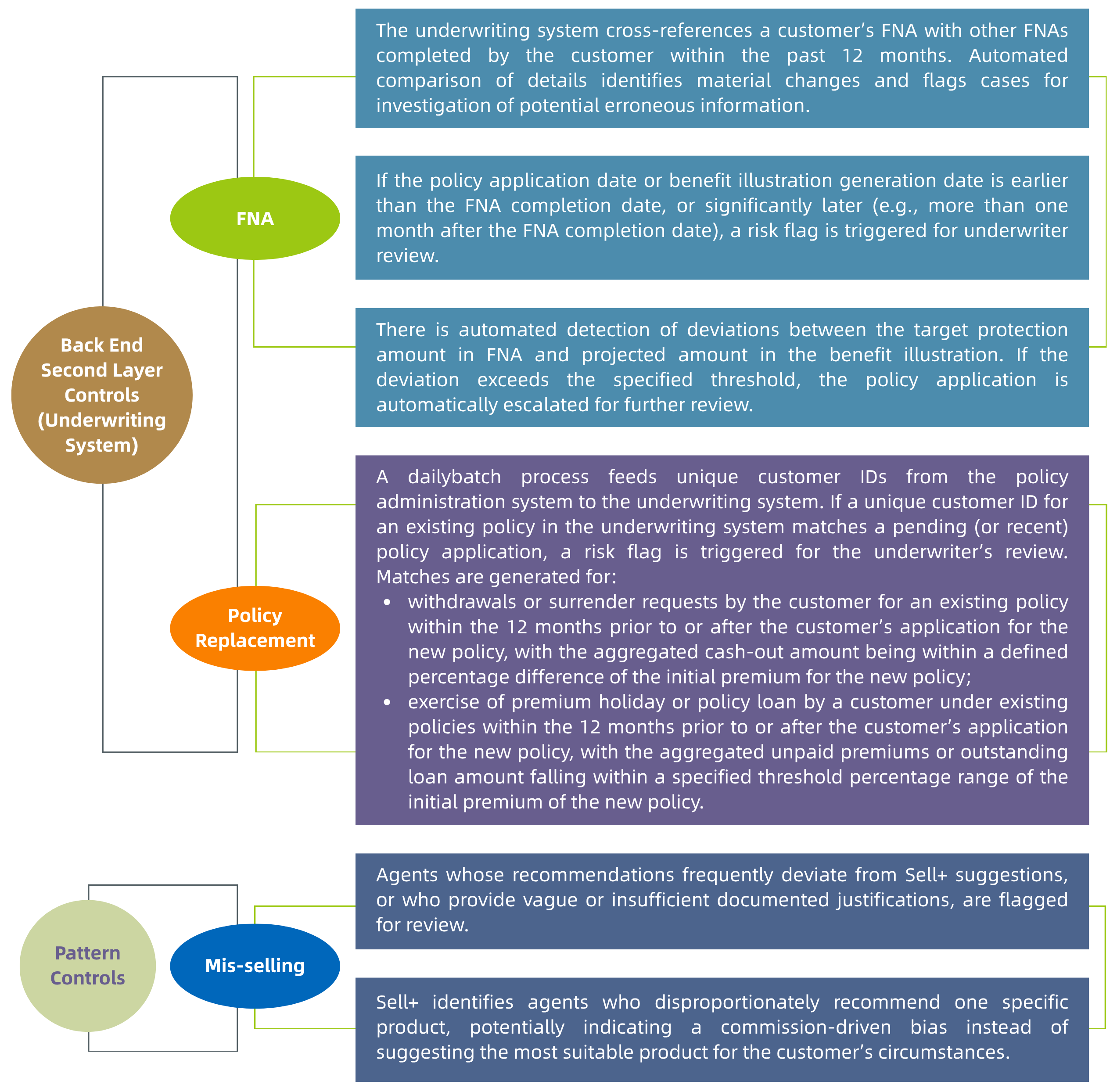

Sell+ integrates the front-end application used by agents with the insurer’s back-end underwriting systems and with feeds from the insurer’s various data repositories. This allows validation and monitoring controls for the FNA process and policy replacements to be applied across multiple layers of the insurer’s operation. The underwriting controls and processes provide a second layer of review. A third layer is provided by pattern analysis functionality in Sell+, which takes a holistic view of all historic applications submitted by an agent to identify patterns that can trigger red flags for potential mis-selling.

These validation controls and processes are summarized in the table below.

Observations on Common Shortcomings in Design and Implementation

The automated controls and processes in a system like Sell+, as described above, can bring obvious compliance and efficiency benefits to an insurer. Eliminating multiple manual data re-entry steps across disconnected systems reduces the risk of human error, and allows information from all systems to be used to meet regulatory requirements and to identify patterns indicating potential non-compliance.

Implementing a new system like Sell+ through a digital transformation project is a considerable challenge for any insurer. When adopting new systems, traditional insurers do not start with a blank slate. They have to work out how to integrate the new system with existing systems and operations, which are often replete with workarounds and bespoke tweaks implemented over many years. It is unlikely that any single individual will have a holistic, in-depth understanding of everything, or the skill-set to anticipate how the new system will behave, particularly where it interfaces with the plethora of existing systems, and whether it will produce the desired outputs and outcomes. No one in insurance (or any industry) is this superhuman!

The project therefore requires input from all business units. This diversity of input and review is necessary to obtain a holistic view of all relevant matters and risks. Where implementation proceeds without this diverse, holistic viewpoint and deep understanding, gaps are inevitable, and the new system will prove inadequate to prevent the non-compliances it is intended to eliminate.

The following are the types of problems the IA might find in a conduct inspection when examining a system like Sell+ that has not been implemented with the holistic and comprehensive view described above:

1. Insufficient testing of system logic

When a customer declares multiple objectives for purchasing a policy in the FNA process, the logic in the system maps available products against any of the declared objectives, rather than selecting products that meet all of the customer’s declared objectives. The result is a list of recommended products, none of which individually satisfies all the identified objectives, whilst the product that does meet all of them is not included on the list. If the agent recommends a single product from this list, it will not be the product that best matches the customer’s interests. The servicing agent may recognize this, but cannot manually bypass the Sell+ recommendations without triggering an erroneous red flag for potential mis-selling, which leads to an unnecessary review by the underwriter and creates avoidable workload.
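The difference between the two matching rules can be illustrated with a short Python sketch. The article does not describe exactly how the flawed logic builds its list, so the sketch assumes one plausible mechanism: each declared objective is matched on its own, taking the first catalogue product that meets it, and the per-objective picks are combined. The catalogue, product names and objective labels are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Product:
    # Hypothetical product record: its name and the FNA objectives it meets.
    name: str
    objectives: frozenset


# Hypothetical catalogue, listed in the order the system would rank products.
CATALOGUE = [
    Product("Protector", frozenset({"protection"})),
    Product("Saver", frozenset({"savings"})),
    Product("Protect-and-Save", frozenset({"protection", "savings"})),
]


def recommend_per_objective(declared, catalogue):
    """Flawed rule (assumed): match each objective separately, take the first
    product meeting it, and combine the per-objective picks."""
    picks = []
    for objective in sorted(declared):
        for product in catalogue:
            if objective in product.objectives:
                if product.name not in picks:
                    picks.append(product.name)
                break  # stop at the first match for this objective
    return picks


def recommend_all_objectives(declared, catalogue):
    """Intended rule: keep only products that meet every declared objective."""
    return [p.name for p in catalogue if set(declared) <= p.objectives]


declared = {"protection", "savings"}
print(recommend_per_objective(declared, CATALOGUE))   # ['Protector', 'Saver']
print(recommend_all_objectives(declared, CATALOGUE))  # ['Protect-and-Save']
```

With both objectives declared, the per-objective rule returns two single-objective products and never surfaces the one product that meets both; the subset check (`declared <= objectives`) returns only that product.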

Areas for Improvement:

This shortcoming would stem from a lack of understanding of the insurance sales process by those responsible for designing the system logic, resulting in an inability to foresee this result. This is not that unusual, given that system developers may not be insurance personnel. However, this type of problem should have been identified in the user acceptance testing (UAT) phase for the system. If the UAT had been carried out using a wide enough range of common real-life insurance application examples, this issue could have been identified and rectified before full implementation. UAT is a critical process and it is imperative that business units (especially the personnel using the system in conducting sales) are fully engaged in that process to throw multiple real-life scenarios at the system in a quasi-production environment to test its limits and flush out problems.

2. Inadequate data management

Problems with the implementation of Sell+ might also come from inadequate data management. Examples are as follows:

i. Data Accessibility:

A system like Sell+ interfaces with back-end underwriting systems, which themselves draw on data repository systems. Over the years, several data repository systems may have been developed, with new ones going online to replace old ones, but with the old ones retained for policies underwritten before the changeover. When a new system like Sell+ is rolled out, its integration with the underwriting systems may not extend fully to these older repository systems. As a result, policy replacement identification and FNA affordability assessments in Sell+ (which draw on existing policyholder information held by the insurer) could be incomplete.

ii. Data Inaccuracy:

Some years ago, insurers moved to adopt Optical Character Recognition (OCR) technology to capture data from paper applications. If the OCR-captured data was not properly verified at the time, inaccuracies may have entered the records, and these now reduce the effectiveness of Sell+ in identifying red flags for FNA purposes, policy replacement and patterns of potential mis-selling.

iii. Multiple Customer IDs:

Over the years, the insurer may have used different unique ID mechanisms for identifying customers in different systems deployed. This can result in the same customer being identified with multiple different unique IDs. An insurer’s automated controls rely on unique IDs to aggregate transactions for the same customer and detect unusual patterns. Without ongoing data cleansing to ensure a customer is only identified with a single unique ID (so that it is truly unique), the effectiveness of a system like Sell+ will be reduced or even compromised, as it will not be able to produce the holistic view of a customer which is intended.
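A minimal sketch of why consolidation matters, assuming the data-cleansing exercise produces a crosswalk from each legacy system ID to a single master customer ID. The ID formats, the crosswalk and the policy records are all hypothetical.

```python
from collections import defaultdict

# Hypothetical crosswalk produced by data cleansing: every system-specific
# customer ID (prefixed with its source system) maps to one master ID.
ID_CROSSWALK = {
    "LEGACY-A:00123": "CUST-0001",
    "LEGACY-B:XY-77": "CUST-0001",  # same person, recorded in a second system
    "NEW:9f3e": "CUST-0002",
}

# Hypothetical policy records as they arrive from different systems.
POLICIES = [
    {"customer_id": "LEGACY-A:00123", "policy_no": "P1"},
    {"customer_id": "LEGACY-B:XY-77", "policy_no": "P2"},
    {"customer_id": "NEW:9f3e", "policy_no": "P3"},
]


def policies_by_customer(policies, crosswalk):
    """Group policy numbers under a master customer ID. IDs missing from the
    crosswalk are kept as-is so they stay visible for follow-up cleansing."""
    grouped = defaultdict(list)
    for record in policies:
        master = crosswalk.get(record["customer_id"], record["customer_id"])
        grouped[master].append(record["policy_no"])
    return dict(grouped)


print(policies_by_customer(POLICIES, {}))
# {'LEGACY-A:00123': ['P1'], 'LEGACY-B:XY-77': ['P2'], 'NEW:9f3e': ['P3']}
print(policies_by_customer(POLICIES, ID_CROSSWALK))
# {'CUST-0001': ['P1', 'P2'], 'CUST-0002': ['P3']}
```

Without the crosswalk, the two policies held by the same person appear under separate IDs, so any rule that aggregates by customer (for example, a replacement or pattern check) never sees them together.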

iv. Inconsistent Data Format:

Suppose the insurer has created a centralized data repository that integrates data feeds from multiple systems, but the data formats differ between those systems because of inconsistent data entry practices. If Sell+ relies on the centralized repository, its effectiveness will be hindered. Detection of potential policy replacement situations, for example, relies on analyzing previous policy application dates and calculating the time gap to the current policy application. If one system records dates as day/month/year and another as month/day/year, the same entry can be read as two different dates, so differences in date formats can have a real limiting effect on the automated controls being deployed.
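The sketch below shows one way to normalize dates before computing the gap, assuming each source system’s date format is known and documented. The system names and formats are hypothetical, and the month-counting helper is illustrative rather than a regulatory definition of any replacement period.

```python
from datetime import date, datetime

# Hypothetical mapping of each source system to the date format it uses.
SOURCE_DATE_FORMATS = {
    "system_a": "%d/%m/%Y",  # day/month/year
    "system_b": "%m/%d/%Y",  # month/day/year
    "system_c": "%Y-%m-%d",  # ISO 8601
}


def normalize_date(raw: str, source: str) -> date:
    """Parse a date string using the format declared for its source system."""
    return datetime.strptime(raw, SOURCE_DATE_FORMATS[source]).date()


def months_between(earlier: date, later: date) -> int:
    """Whole calendar months from `earlier` to `later`."""
    months = (later.year - earlier.year) * 12 + (later.month - earlier.month)
    if later.day < earlier.day:
        months -= 1  # the last month is not yet complete
    return months


# The same raw string means different dates depending on its source.
as_day_first = normalize_date("03/04/2025", "system_a")    # 3 April 2025
as_month_first = normalize_date("03/04/2025", "system_b")  # 4 March 2025
current = normalize_date("2025-09-01", "system_c")

print(months_between(as_day_first, current))    # 4
print(months_between(as_month_first, current))  # 5
```

Reading one string under the wrong convention shifts the computed gap by a month here, which is enough to move a case across a look-back window boundary.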

v. Incorrect Data Mapping:

Mismatches in data field definitions between systems that need to interface can result in failed data mapping. For example, if the “Policy Application Date” in one system refers to the date the customer signed the policy application, but in another system refers to the date the application was fed into that system, the discrepancy – even though it may only be a few days – can cause crucial red flags to be missed or raised in error, such as the check on whether the policy application date is earlier than the FNA date.
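A minimal sketch of the application-date check, using hypothetical dates, shows how feeding the rule a system-entry date where it expects the customer’s signing date can suppress the red flag.

```python
from datetime import date


def application_precedes_fna(application_date: date, fna_date: date) -> bool:
    """Red flag: the policy application is dated before the FNA, i.e. the FNA
    may not have informed the recommendation."""
    return application_date < fna_date


fna_date = date(2025, 6, 10)

# Hypothetical case: the customer signed on 6 June (before the FNA), but the
# application was only fed into the downstream system on 12 June.
signed_date = date(2025, 6, 6)
system_entry_date = date(2025, 6, 12)

print(application_precedes_fna(signed_date, fna_date))        # True  (flag raised)
print(application_precedes_fna(system_entry_date, fna_date))  # False (flag missed)
```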

vi. Incomplete Data Transfer:

Faulty logic for extracting data from a particular system can cause data that the controls depend on to be missed. For example, if the extraction logic captures only customer IDs tied to “in-force” policies and excludes terminated or surrendered cases, this could inhibit identification of potential policy replacement situations, since replacement typically involves an existing policy that has been surrendered or terminated around the time of the new application.
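The effect of the extraction filter can be sketched as follows. The status values, policy records and the simple `replacement_candidates` helper are hypothetical; a real replacement check would also consider timing, which is omitted here.

```python
# Hypothetical extract of one customer's existing policies from a legacy system.
EXISTING_POLICIES = [
    {"customer_id": "CUST-0001", "policy_no": "P1", "status": "in-force"},
    {"customer_id": "CUST-0001", "policy_no": "P2", "status": "surrendered"},
    {"customer_id": "CUST-0001", "policy_no": "P3", "status": "terminated"},
]

ENDED_STATUSES = {"surrendered", "terminated"}


def extract(policies, statuses):
    """Return only the policies whose status is in `statuses`."""
    return [p for p in policies if p["status"] in statuses]


def replacement_candidates(extracted):
    """Policy numbers of ended policies, to be checked for a replacement link."""
    return [p["policy_no"] for p in extracted if p["status"] in ENDED_STATUSES]


# Faulty extraction logic: only in-force policies are carried across.
faulty = extract(EXISTING_POLICIES, {"in-force"})
# Corrected extraction: ended policies are kept as well.
complete = extract(EXISTING_POLICIES, {"in-force"} | ENDED_STATUSES)

print(replacement_candidates(faulty))    # []
print(replacement_candidates(complete))  # ['P2', 'P3']
```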

Areas for Improvement:

One root cause of these issues is the build-up, over years, of different ways of recording and storing data across different systems, without sufficient steps being taken to reconcile the data and keep it consistent. The longer this goes unreconciled, the more difficult remediation becomes. When a new system like Sell+ is rolled out, the discrepancies inevitably result in gaps that reduce the new system’s effectiveness and translate into sales practice non-compliances.

These issues highlight the need for, and value of, regular audits, reconciliation protocols and quality checks during system migrations and upgrades. Another solution is for historical data to be fully transformed and integrated into centralized repositories, or made accessible in real time through APIs or middleware (another transformation project with its own challenges, but one that may be worthwhile to enable digitization). Employee training on data quality and governance should also be prioritized to ensure sustainable improvements in system accuracy and reliability.
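A reconciliation protocol can start with a key-by-key comparison between a source system and the migrated target. The sketch below is a minimal illustration under assumed conditions: records are keyed by policy number and compared field by field, and the field names and records are hypothetical.

```python
def reconcile(source: dict[str, dict], target: dict[str, dict]) -> dict[str, list[str]]:
    """Compare records keyed by policy number between a source system and the
    migrated target. Reports keys missing from the target, keys present only
    in the target, and keys whose field values differ."""
    missing = sorted(source.keys() - target.keys())
    unexpected = sorted(target.keys() - source.keys())
    mismatched = sorted(k for k in source.keys() & target.keys() if source[k] != target[k])
    return {"missing": missing, "unexpected": unexpected, "mismatched": mismatched}


# Hypothetical records before and after a migration.
source_records = {
    "P1": {"customer_id": "CUST-0001", "status": "in-force"},
    "P2": {"customer_id": "CUST-0001", "status": "surrendered"},
    "P3": {"customer_id": "CUST-0002", "status": "in-force"},
}
target_records = {
    "P1": {"customer_id": "CUST-0001", "status": "in-force"},
    "P3": {"customer_id": "CUST-0002", "status": "lapsed"},
}

print(reconcile(source_records, target_records))
# {'missing': ['P2'], 'unexpected': [], 'mismatched': ['P3']}
```

In this example the surrendered policy P2 has been dropped in migration, which is precisely the kind of record a replacement check relies on.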

3. Calibration of parameters

As indicated, the monitoring rules for detecting potential policy replacement in Sell+ trigger red flags where the aggregate surrender or withdrawal value from identified existing policies falls within a threshold percentage range of the initial premium for the new policy application. The insurer may calibrate this percentage range to strike a balance between capturing red-flag situations comprehensively and not producing too many false positives. Our experience suggests that, in making these judgements, too much weight is given to avoiding false positives at the expense of comprehensiveness, leading to genuine policy replacement situations going undetected.
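A minimal sketch of the threshold rule described above, with hypothetical percentage ranges (the article does not disclose actual values), shows how narrowing the range to reduce false positives can let a plausible replacement pass unflagged.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ReplacementRule:
    # Hypothetical calibration: flag when the aggregate surrender/withdrawal
    # value lies within [lower_pct, upper_pct] percent of the initial premium.
    lower_pct: float
    upper_pct: float

    def flags(self, surrender_values: list[float], initial_premium: float) -> bool:
        if initial_premium <= 0:
            raise ValueError("initial premium must be positive")
        ratio_pct = 100 * sum(surrender_values) / initial_premium
        return self.lower_pct <= ratio_pct <= self.upper_pct


wide = ReplacementRule(lower_pct=50, upper_pct=150)
narrow = ReplacementRule(lower_pct=90, upper_pct=110)

# Hypothetical case: two policies surrendered for 30,000 and 25,000 before a
# new application with an initial premium of 80,000 (ratio 68.75%).
values, premium = [30_000, 25_000], 80_000
print(wide.flags(values, premium))    # True
print(narrow.flags(values, premium))  # False
```

Keeping the range as a named, versioned parameter (rather than hard-coding it) also makes it straightforward to back-test candidate ranges against recent replacement cases.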

Areas for Improvement:

An insurer should base such parameters on historical data and actual trends from recent policy replacement cases, so that the thresholds have a sound basis and are calibrated to the actual risks of non-compliance (whilst minimizing false positives). Parameter adjustments should follow a formal approval process, with the justification documented through thorough analysis and sign-off obtained from relevant stakeholders. Maintaining an audit log of all parameter amendments, recording when, why and by whom each change was made, will further enhance accountability and transparency.
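The audit log described above could be modelled as an append-only record. The sketch below is hypothetical: the field and parameter names are assumptions, and the check that the approver differs from the person making the change is an illustrative control, not a requirement stated in the article.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class ParameterChange:
    # One audit-log entry: what changed, when, why, and by whom.
    parameter: str
    old_value: float
    new_value: float
    changed_at: datetime
    reason: str
    changed_by: str
    approved_by: str


class ParameterAuditLog:
    """Append-only by design: the class exposes no edit or delete methods."""

    def __init__(self) -> None:
        self._entries: list[ParameterChange] = []

    def record(self, parameter: str, old_value: float, new_value: float,
               reason: str, changed_by: str, approved_by: str) -> ParameterChange:
        # Require a documented justification for every change.
        if not reason.strip():
            raise ValueError("a documented justification is required")
        # Illustrative control (assumption): sign-off by a different person.
        if changed_by == approved_by:
            raise ValueError("change must be signed off by a different person")
        entry = ParameterChange(parameter, old_value, new_value,
                                datetime.now(timezone.utc), reason,
                                changed_by, approved_by)
        self._entries.append(entry)
        return entry

    def history(self, parameter: str) -> list[ParameterChange]:
        """All recorded changes to one parameter, oldest first."""
        return [e for e in self._entries if e.parameter == parameter]


log = ParameterAuditLog()
log.record("replacement_upper_pct", 150, 120,
           "Back-tested against recent replacement cases", "analyst_1", "compliance_lead")
print(len(log.history("replacement_upper_pct")))  # 1
```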

Parameter updates should also be thoroughly tested to ensure they generate accurate alerts. Periodic reviews of the monitoring rules’ overall performance should be conducted, drawing on metrics such as alert accuracy and false positive rates, as well as feedback from legal and compliance functions and from underwriters. These measures will ensure that the parameter-based validations and monitoring remain effective, efficient, and aligned with evolving risks and business needs.
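The review metrics can be computed from the outcomes of alert reviews. The article does not define them, so the sketch assumes that alert accuracy is the share of alerts confirmed as genuine and that the false positive rate is the share of alerts dismissed on review (a statistical definition would instead divide by all non-replacement cases). It also adds a detection rate, which depends on genuine cases found by other means, for example sample file reviews. All counts are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ReviewOutcome:
    # Hypothetical counts from a periodic review of the monitoring rule.
    true_alerts: int   # alerts confirmed as genuine replacement cases
    false_alerts: int  # alerts dismissed on review (false positives)
    missed_cases: int  # genuine cases found by other means, never alerted


def alert_accuracy(o: ReviewOutcome) -> float:
    """Share of alerts that were genuine (precision)."""
    total = o.true_alerts + o.false_alerts
    return o.true_alerts / total if total else 0.0


def false_positive_share(o: ReviewOutcome) -> float:
    """Share of alerts dismissed as false positives."""
    total = o.true_alerts + o.false_alerts
    return o.false_alerts / total if total else 0.0


def detection_rate(o: ReviewOutcome) -> float:
    """Share of genuine cases the rule actually alerted on (recall)."""
    total = o.true_alerts + o.missed_cases
    return o.true_alerts / total if total else 0.0


outcome = ReviewOutcome(true_alerts=40, false_alerts=160, missed_cases=10)
print(alert_accuracy(outcome))        # 0.2
print(false_positive_share(outcome))  # 0.8
print(detection_rate(outcome))        # 0.8
```

Tracking the detection rate alongside the false positive measure keeps the comprehensiveness side of the calibration trade-off visible in each review.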

Wrap up

The case study in this article highlights common observations made by the IA in its inspections of insurers regarding automated controls and processes in digitized sales and related systems. Automating compliance controls can be of considerable benefit to an insurer. At the same time, if system controls are not properly designed, tested, implemented and managed, not only may those benefits be lost, but the resulting gaps could exacerbate exposure to non-compliance, poor policyholder outcomes and unfair treatment.

Effective digitization through transformation projects depends on precise and careful implementation, robust governance and clear accountability, informed by the full spectrum of views needed to obtain a holistic and deep understanding of the operations and systems affected. Comprehensive UAT and continuous refinement are also vital. Issues such as data quality, system logic accuracy and parameter calibration must be addressed to ensure automated controls function as intended and meet regulatory expectations.

Insurers (and those managing them) need to be realistic about timetabling and allocate adequate resources to digitization projects. A properly timetabled and adequately resourced plan for design, testing and rollout can achieve the desired outcome sooner than cutting corners and rushing to meet quarterly deadlines, only to spend more money later on remediation. A more comprehensive and planned approach, subject to robust implementation governance, will also ultimately help build customer trust and confidence in the systems being deployed, and thereby foster sustainable growth and integrity across the entire insurance sector.